In brief

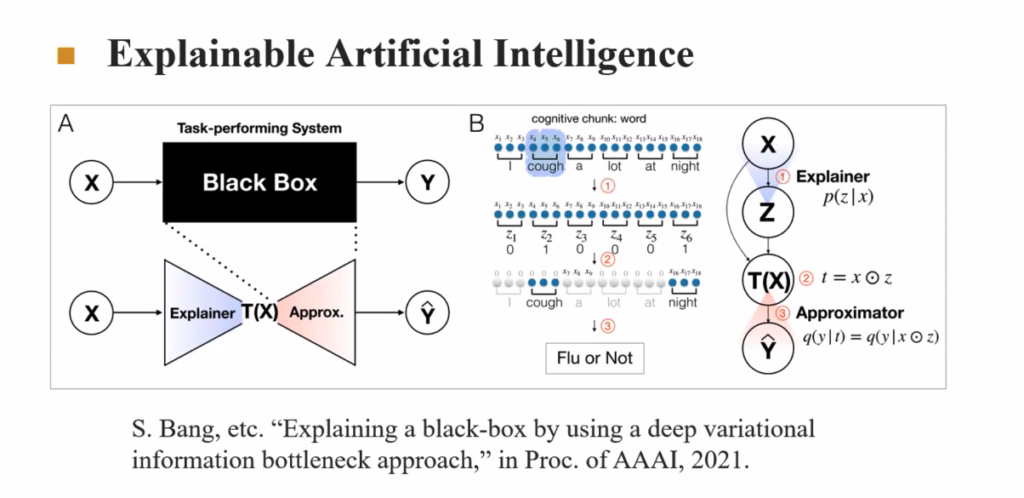

Explainable artificial intelligence (XAI) is the practice of making the outcomes of non-linearly programmed systems more transparent, in an effort to avoid “black-box” processes. In computer science, XAI is defined by tools and frameworks that help users understand and interpret the predictions made by machine learning models.

Libraries

The most commonly used methods and libraries for AI explainability in Python are:

1. LIME (Local Interpretable Model-Agnostic Explanations)

LIME explains individual predictions by perturbing the input data and observing how the model’s predictions change. It works with most models on processed tabular, text, and image data.
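To make the perturb-and-observe idea concrete, here is a minimal NumPy sketch of a local linear surrogate. It is not LIME's actual implementation: the function `local_surrogate`, the Gaussian sampling, the kernel width, and the toy `black_box` model are all assumptions chosen for illustration.

```python
import numpy as np

def local_surrogate(predict_fn, instance, scale=0.5, n_samples=2000,
                    kernel_width=1.0, seed=0):
    """Fit a proximity-weighted linear model around `instance`.

    Returns (coefficients, intercept) of the local linear surrogate.
    """
    rng = np.random.default_rng(seed)
    # 1. Perturb: sample points in a Gaussian neighbourhood of the instance.
    samples = instance + rng.normal(0.0, scale, size=(n_samples, instance.size))
    # 2. Query the black-box model on the perturbed points.
    preds = predict_fn(samples)
    # 3. Weight each sample by its proximity to the instance (RBF kernel).
    dist = np.linalg.norm(samples - instance, axis=1)
    weights = np.exp(-(dist ** 2) / (kernel_width ** 2))
    # 4. Weighted least squares: scale rows by sqrt(weight), then solve.
    design = np.hstack([samples - instance, np.ones((n_samples, 1))])
    sw = np.sqrt(weights)[:, None]
    solution, *_ = np.linalg.lstsq(design * sw, preds * sw[:, 0], rcond=None)
    return solution[:-1], solution[-1]

def black_box(X):
    # Hypothetical model: nonlinear in feature 0, linear in feature 1,
    # and independent of feature 2.
    return X[:, 0] ** 2 + 3.0 * X[:, 1]

x0 = np.array([1.0, 2.0, 0.5])
coefs, intercept = local_surrogate(black_box, x0)
print(np.round(coefs, 2))
```

The surrogate's coefficients approximate the local slope of the model at `x0`, so the unused third feature should receive a weight close to zero.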

#pip install lime

from lime import lime_tabular

explainer = lime_tabular.LimeTabularExplainer(training_data, feature_names=feature_names, class_names=class_names, mode='classification')

explanation = explainer.explain_instance(instance, model.predict_proba)

explanation.show_in_notebook()

2. SHAP (SHapley Additive exPlanations)

SHAP is based on cooperative game theory and provides consistent and accurate feature importance scores for individual predictions. SHAP provides both global and local interpretability and works with many types of models (e.g., tree-based models, deep learning, etc.).
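The game-theoretic idea behind SHAP can be sketched by computing exact Shapley values for a tiny model. This is a brute-force illustration rather than how the `shap` library works internally: the `shapley_values` helper, the all-zeros baseline, and the toy `model` are assumptions, and the enumeration is only feasible for a handful of features.

```python
from itertools import combinations
from math import factorial

def shapley_values(value_fn, x, baseline):
    """Exact Shapley values by enumerating every feature coalition.

    Features outside a coalition are replaced by their baseline value.
    Cost grows as 2**n, so this is only practical for a few features.
    """
    n = len(x)

    def coalition_value(subset):
        mixed = [x[i] if i in subset else baseline[i] for i in range(n)]
        return value_fn(mixed)

    phi = [0.0] * n
    for i in range(n):
        others = [j for j in range(n) if j != i]
        for size in range(n):
            # Shapley weight for coalitions of this size.
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = set(subset)
                # Marginal contribution of feature i to coalition s.
                phi[i] += weight * (coalition_value(s | {i}) - coalition_value(s))
    return phi

def model(v):
    # Hypothetical model with an interaction between features 0 and 1.
    return 2.0 * v[0] + v[0] * v[1] + 0.5 * v[2]

x = [1.0, 3.0, 4.0]
baseline = [0.0, 0.0, 0.0]
phi = shapley_values(model, x, baseline)
print(phi)
print(sum(phi), model(x) - model(baseline))
```

The attributions sum to the difference between the prediction and the baseline prediction, which is the additivity property that gives SHAP its name. The interaction term is split evenly between the two features involved.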

#pip install shap

import shap

explainer = shap.TreeExplainer(model) # estimates SHAP values for tree models and ensembles of trees; other explainers are available for other model types

shap_values = explainer.shap_values(X)

shap.summary_plot(shap_values, X)

3. ELI5 (Explain Like I’m 5)

ELI5 provides simple ways to inspect machine learning models and explain their predictions.
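One inspection technique that ELI5 offers, and that this section's snippet relies on, is permutation importance: shuffle one feature at a time and measure how much the score drops. The sketch below shows the idea in plain NumPy. It is not ELI5's code; `permutation_importance`, `neg_mse`, and the stand-in `fitted_model` are hypothetical names used only for illustration.

```python
import numpy as np

def permutation_importance(predict_fn, X, y, score_fn, n_repeats=5, seed=0):
    """Mean drop in score when each column is shuffled independently."""
    rng = np.random.default_rng(seed)
    base_score = score_fn(y, predict_fn(X))
    importances = np.zeros(X.shape[1])
    for col in range(X.shape[1]):
        drops = []
        for _ in range(n_repeats):
            X_perm = X.copy()
            # Shuffling breaks the link between this feature and the target.
            X_perm[:, col] = rng.permutation(X_perm[:, col])
            drops.append(base_score - score_fn(y, predict_fn(X_perm)))
        importances[col] = np.mean(drops)
    return importances

def neg_mse(y_true, y_pred):
    # Higher is better, so a drop in this score means a worse model.
    return -np.mean((y_true - y_pred) ** 2)

rng = np.random.default_rng(42)
X_demo = rng.normal(size=(500, 3))
y_demo = 4.0 * X_demo[:, 0] + 1.0 * X_demo[:, 1]  # feature 2 is pure noise

def fitted_model(X):
    # Stand-in for a trained model that has learned the true relationship.
    return 4.0 * X[:, 0] + 1.0 * X[:, 1]

imp = permutation_importance(fitted_model, X_demo, y_demo, neg_mse)
print(np.round(imp, 3))
```

Because the stand-in model ignores the third feature entirely, shuffling it leaves the score unchanged and its importance is zero.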

#pip install eli5

import eli5

from eli5.sklearn import PermutationImportance

perm = PermutationImportance(model, random_state=1).fit(X_test, y_test)

eli5.show_weights(perm, feature_names=feature_names)

4. Alibi

Alibi is a framework for explainability and adversarial robustness in machine learning models. It includes various algorithms like Anchors, Counterfactuals, and SHAP.

#pip install alibi

from alibi.explainers import AnchorTabular

explainer = AnchorTabular(model.predict, feature_names) # first argument is the prediction function (named `predictor` in recent Alibi releases)

explainer.fit(X_train, disc_perc=[25, 50, 75])

explanation = explainer.explain(instance)

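print('Anchor:', ' AND '.join(explanation.anchor)) # Alibi explanations expose their fields as attributes rather than a show_in_notebook() method

print('Precision:', explanation.precision)

5. InterpretML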

InterpretML is an explainability tool from Microsoft. It provides glass-box models like Explainable Boosting Machine (EBM) as well as model-agnostic methods like SHAP.

#pip install interpret

from interpret.glassbox import ExplainableBoostingClassifier

from interpret import show

ebm = ExplainableBoostingClassifier()

ebm.fit(X_train, y_train)

explanation = ebm.explain_global()

show(explanation)

6. Fairness and Interpretability in TensorFlow Models

The TensorFlow/Keras ecosystem has tools for evaluating fairness and explainability:

• TensorFlow Model Analysis (TFMA): Evaluates model fairness and performance.

• TensorBoard: Visualizes model metrics, embeddings, and more.
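A core idea behind fairness evaluation of this kind is computing the same metric separately on slices of the data and comparing the results. The following sketch shows that idea in plain Python. It does not use or reproduce the TFMA API; `accuracy_by_slice` and the sample `records` are hypothetical.

```python
from collections import defaultdict

def accuracy_by_slice(records, slice_key):
    """Return accuracy computed separately for each value of `slice_key`."""
    totals = defaultdict(int)
    correct = defaultdict(int)
    for row in records:
        group = row[slice_key]
        totals[group] += 1
        correct[group] += int(row["label"] == row["prediction"])
    return {group: correct[group] / totals[group] for group in totals}

# Hypothetical evaluation records: true label, model prediction, and a
# feature to slice on.
records = [
    {"region": "north", "label": 1, "prediction": 1},
    {"region": "north", "label": 0, "prediction": 0},
    {"region": "north", "label": 1, "prediction": 0},
    {"region": "south", "label": 1, "prediction": 1},
    {"region": "south", "label": 0, "prediction": 0},
]
per_slice = accuracy_by_slice(records, "region")
print(per_slice)
```

A large gap between slices is a signal worth investigating, although a single metric on a small sample is not enough to conclude that a model is unfair.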

import tensorflow_model_analysis as tfma

eval_config = tfma.EvalConfig(
    model_specs=[tfma.ModelSpec(label_key='label')],
    slicing_specs=[tfma.SlicingSpec()],  # an empty SlicingSpec selects the overall (unsliced) dataset
    metrics_specs=[tfma.MetricsSpec()]
)

7. Captum (PyTorch)

Captum is a model interpretability library built specifically for PyTorch models. It provides tools to understand how neural networks behave, including attribution algorithms such as Integrated Gradients and Saliency Maps.
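As a rough illustration of what Integrated Gradients computes, the sketch below approximates the path integral of gradients from a baseline to the input with a midpoint Riemann sum. It uses NumPy and a hand-written gradient instead of PyTorch autograd, so it is not Captum's implementation; `integrated_gradients`, `f`, and `grad_f` are assumptions for the example.

```python
import numpy as np

def integrated_gradients(grad_fn, x, baseline, steps=200):
    """Approximate Integrated Gradients along the straight path
    from `baseline` to `x` using a midpoint Riemann sum."""
    alphas = (np.arange(steps) + 0.5) / steps
    # Points on the straight line between the baseline and the input.
    path = baseline + alphas[:, None] * (x - baseline)
    avg_grad = np.mean([grad_fn(p) for p in path], axis=0)
    # Scale the averaged gradient by the input difference, per feature.
    return (x - baseline) * avg_grad

def f(v):
    # Hypothetical differentiable model.
    return v[0] ** 2 + np.sin(v[1]) + 3.0 * v[2]

def grad_f(v):
    # Analytic gradient of f, standing in for automatic differentiation.
    return np.array([2.0 * v[0], np.cos(v[1]), 3.0])

x_in = np.array([1.0, 0.5, -2.0])
base = np.zeros(3)
attr = integrated_gradients(grad_f, x_in, base)
print(np.round(attr, 4))
print(attr.sum(), f(x_in) - f(base))
```

The attributions sum (up to numerical error) to the difference between the model output at the input and at the baseline, a property usually called completeness.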

#pip install captum

from captum.attr import IntegratedGradients

ig = IntegratedGradients(model)

attr = ig.attribute(input_tensor, target=0)

8. Partial Dependence Plots (PDP) and Individual Conditional Expectation (ICE)

PDP and ICE plots are visualization tools used to understand the impact of individual features on the model’s predictions.
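To show what these plots compute, here is a minimal NumPy sketch: an ICE curve fixes one feature at each grid value for a single row and records the prediction, and the partial dependence curve is the average of all ICE curves. The helper `ice_curves` and the `toy_model` are hypothetical and serve only as an illustration.

```python
import numpy as np

def ice_curves(predict_fn, X, feature, grid):
    """One curve per row: predictions as `feature` sweeps over `grid`
    while every other feature keeps its observed value."""
    curves = np.empty((X.shape[0], len(grid)))
    for k, value in enumerate(grid):
        X_mod = X.copy()
        X_mod[:, feature] = value
        curves[:, k] = predict_fn(X_mod)
    return curves

def toy_model(X):
    # Hypothetical model: the effect of feature 0 depends on feature 1.
    return 2.0 * X[:, 0] + X[:, 0] * X[:, 1]

rng = np.random.default_rng(0)
X_bg = rng.normal(size=(200, 2))
grid = np.linspace(-1.0, 1.0, 5)

ice = ice_curves(toy_model, X_bg, feature=0, grid=grid)
pdp = ice.mean(axis=0)  # partial dependence = average of the ICE curves
print(np.round(pdp, 3))
```

Because of the interaction term, individual ICE curves have different slopes even though the averaged PDP is a single straight line, which is why ICE plots can reveal heterogeneity that a PDP hides.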

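from sklearn.inspection import PartialDependenceDisplay # plot_partial_dependence was removed in scikit-learn 1.2

PartialDependenceDisplay.from_estimator(model, X_train, features=[0, 1], kind='both') # kind='both' overlays ICE curves on the PDP

In the following table, a more complete list with additional properties of each method is outlined.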

| Method/Library | Type | Models Supported | Focus |
| --- | --- | --- | --- |
| LIME | Model-agnostic | Any | Local explanation |
| SHAP | Model-agnostic and model-specific | Any | Local & Global explanation |
| ELI5 | Model-agnostic and model-specific | scikit-learn, XGBoost, Keras, etc. | Feature importance and debugging |
| Alibi | Model-agnostic and model-specific | Any | Anchors, counterfactuals, adversarial examples |
| InterpretML | Model-agnostic and glass-box models | Any | Global & Local explanation |
| AIX360 | Model-agnostic and model-specific | Any | Diverse explanation algorithms |
| Skater | Model-agnostic and model-specific | Any | Global & Local explanation |
| Captum (PyTorch) | Model-specific | PyTorch | Neural networks (NNs) |
| DeepLift | Model-specific | Neural Networks | Deep Learning explanation |
| PDP/ICE | Model-agnostic | Any | Visualization-based |